This year is coming to an end (2023, if you're reading this in the future), and many of the questions raised this year about generative AI will keep resonating across the tech industry.

We saw enormous advances in text, image and video generation (and by the end of the year this exploded into a wave of new companies), which raises lots of questions about the future of jobs, copyright issues and, of course, the role of humans in the future.

Rest assured, I don't think this is the end of the world or that we're doomed (except for some rare cases). AGI is probably far in the future, decades away (or even more), despite what lots of people are selling today (that AGI is imminent and so on).

It's not just me saying this: lots of critics of Deep Learning methods explain why AGI might not be close (today's models lack an understanding of the world and other human characteristics).

What will probably happen is that we'll get some kind of rudimentary system that almost looks like AGI (because we humans are easily fooled) but isn't ... and we'll hand it the power to conquer our world. But I think this is far in the future, decades away.

We need better methods/architectures

Having said all that, one might ask: aren't Transformer models (like GPT) the future?

Well, I don't think so ... and again I'm not alone here:

"...For something like “human-level intelligence,” it’s clear that the architecture and learning methods of today’s transformers have many differences from biological brains and learning methods." Melanie Mitchell in Perspectives on the State and Future of Deep Learning - 2023

A few years back, around 2019 (a couple of years after the Transformer was released), I was taking a class on Affective Computing (if this catches your interest, check out Rosalind Picard's book Affective Computing), and my group and I thought it would be a good idea to conduct a Systematic Review of Brain Inspired architectures.

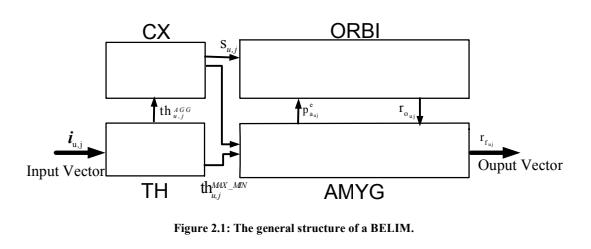

We ended up studying the topic and writing a Systematic Review about it. At that time, architectures tried to mimic the human brain by grouping different "Deep Learning" components together (or something close to that).

Each box represents a part of the brain ... but this doesn't show the whole picture of our brain and comes nowhere near replicating all its power.

So in the end, we need new approaches and a better understanding of information flow inside our heads. This might improve or create new methods or architectures. Until then, we might think AGI is near, but it'll be far away.

You might object that AGI doesn't need to replicate our brain to become super-intelligent. Well, you're right, but without at least the same power, capacity and information flow that we have in our brain, AGI will probably never become a reality.

State of the Art AGI

Nowadays, the media is telling us AGI is near; we even "need to sign" an open letter to stop AGI from becoming a reality (what a joke this was!). Don't get me wrong here, ChatGPT is great (even Google and others admit that by creating their own models [Bard (Google), Grok (Twitter)]), but that ain't AGI. It's a super excellent computer that can do lots of things, some well enough, others better than some humans.

And that raises lots of those questions I mentioned at the beginning of this text:

- Is AI going to steal our jobs?

- Are we doomed? Is AGI here?

We, as humans, don't know what intelligence is, or even worse, what consciousness is ... or how to measure either of them.

"...we need to be able to define and evaluate intelligence in a way that enables comparisons between two systems, as well as comparisons with humans." François Chollet in On the Measure of Intelligence

Over the year we saw lots of news saying that ChatGPT passed all sorts of human tests, even scoring above human level (which rings the AGI bell).

Well, a few researchers (including some people from HuggingFace) pushed that idea a little further and created a new test. On this one, ChatGPT did badly, so badly that it shows us that not everything is lost and AGI is far away (remember that we can be easily fooled?).

Don't believe me? Check their paper, GAIA: a benchmark for General AI Assistants.

But this doesn't mean those models are crappy things we should forget about.

They are still good enough for a lot of tasks, like code generation, which brings us to our first fear: is AI going to steal our jobs?

Programming is doomed!

When ChatGPT started to generate code at the beginning of 2023, lots of people thought that programmers would be replaced by AI (I do think that this will eventually happen, especially with AGI, right?).

This seemed increasingly likely until reality proved otherwise...

Large Language Models and The End of Programming - CS50 Tech Talk with Dr. Matt Welsh

AI will enhance programmers, and we're already seeing this: Copilot X is being integrated into VS Code and it's good, good enough to be usable.

I use it in my personal projects, and it helps a lot with boilerplate code and things that are kind of repetitive.

For newer things, or anything different from the usual, it's not as good and makes a lot of mistakes as well.

But as I mentioned, Copilot and other LLM code generation tools will improve the developer experience and speed up coding, which means companies will hire more devs to build more products and experiment with more new ideas.

I don't think that programmers will be totally replaced by AI in the near future, especially in cases where the product has too many uncertainties (which is the majority of cases in the industry).

But that might have its drawbacks: some people will run away from those tools because they fear the tools will do harm by making us dumber. There's already a trend telling new programmers, in particular, not to use them. Well, even if they don't use them to write code, they should at least use them for study (ChatGPT is good as a tutor, though not as good as a human ... but for lots of people this might be good enough).

![Twitter: [/static/pages/essays/12/meme.png]](/static/pages/essays/12/meme.png)

Copyright issues

Recently the NYT (The New York Times) filed a lawsuit against OpenAI, alleging that ChatGPT infringes the copyright of its articles.

From the examples shown, this is a huge problem, and lawyers might take advantage of it. This is not the first time a company or industry has raised such questions: Getty Images, for example, sued Stability AI over Stable Diffusion.

Gary Marcus published a good article in which he relates the problem ChatGPT is facing (generating copyrighted text) to DALL-E image generation (generating copyrighted images).

This discussion will get hotter in 2024, especially if the NYT wins against OpenAI. That would have deep implications for the AI industry: all the models we have today might have to be pulled and retrained without copyrighted text and images.

The Next Frontier: Video Generation

It looks like the next frontier to be broken by generative AI will be Video Generation, and since the middle of 2023 we've been seeing lots of companies releasing their products.

We have everything from generating our own avatars, like this example by Fabio Akita (O que IAs podem fazer? | Exemplos de Ferramentas), to true video generation from still images.

Players like Stability AI (Stable Video), Runway, Kaiber and Pika are here to stay, and this is probably less problematic regarding copyright because the image being animated is not generated by those platforms.

What I'd like to see next

Creating video is awesome, don't get me wrong ... it would be cool to create a totally indie film out of thin air.

The thing I really want to see is better TTS (Text-To-Speech) models.

OpenAI released its TTS, and from my point of view it's one of the best (I had already tested Polly from AWS [AWS Polly], which is good enough). It has some problems when speaking Brazilian Portuguese, but it's good: the speech has some flow, and most of the time it doesn't sound AI-generated.

Why am I looking for this, you might ask? This is needed if we want fully humanoid robots, or online avatars that could fully fool our brains into believing they are humans or AGI.

I ran a test with the OpenAI APIs to create an online agent ... I did not implement any visuals using MakeHuman or Unreal's MetaHuman, but this is possible right now.

We're only starting to scratch the surface of generative AI. Let's see what the future holds.

Thanks, and keep dreaming of electric sheep, Androids!